Publications

Bredenberg, C., Simoncelli, E., & Savin, C. (2020). Learning efficient task-dependent representations with synaptic plasticity. Advances in Neural Information Processing Systems, 33.

Robinson, J. L., Lee, E. B., Xie, S. X., Rennert, L., Suh, E., Bredenberg, C., ... & Hurtig, H. I. (2018). Neurodegenerative disease concomitant proteinopathies are prevalent, age-related and APOE4-associated. Brain.

Bredenberg, C. (2017). Examining heterogeneous weight perturbations in neural networks with spike-timing-dependent plasticity (BPhil Thesis, University of Pittsburgh).

Presentations

Bredenberg C., Simoncelli E. P., and Savin C. (2021, February). Impression learning: Online predictive coding with synaptic plasticity. Computational and Systems Neuroscience (Cosyne) 2021. Abstract | Talk

Bredenberg C., Savin C., and Kiani R. (2020, February). Recurrent neural circuits overcome partial inactivation by compensation and relearning. Poster presentation, Cosyne 2020. Abstract | Poster

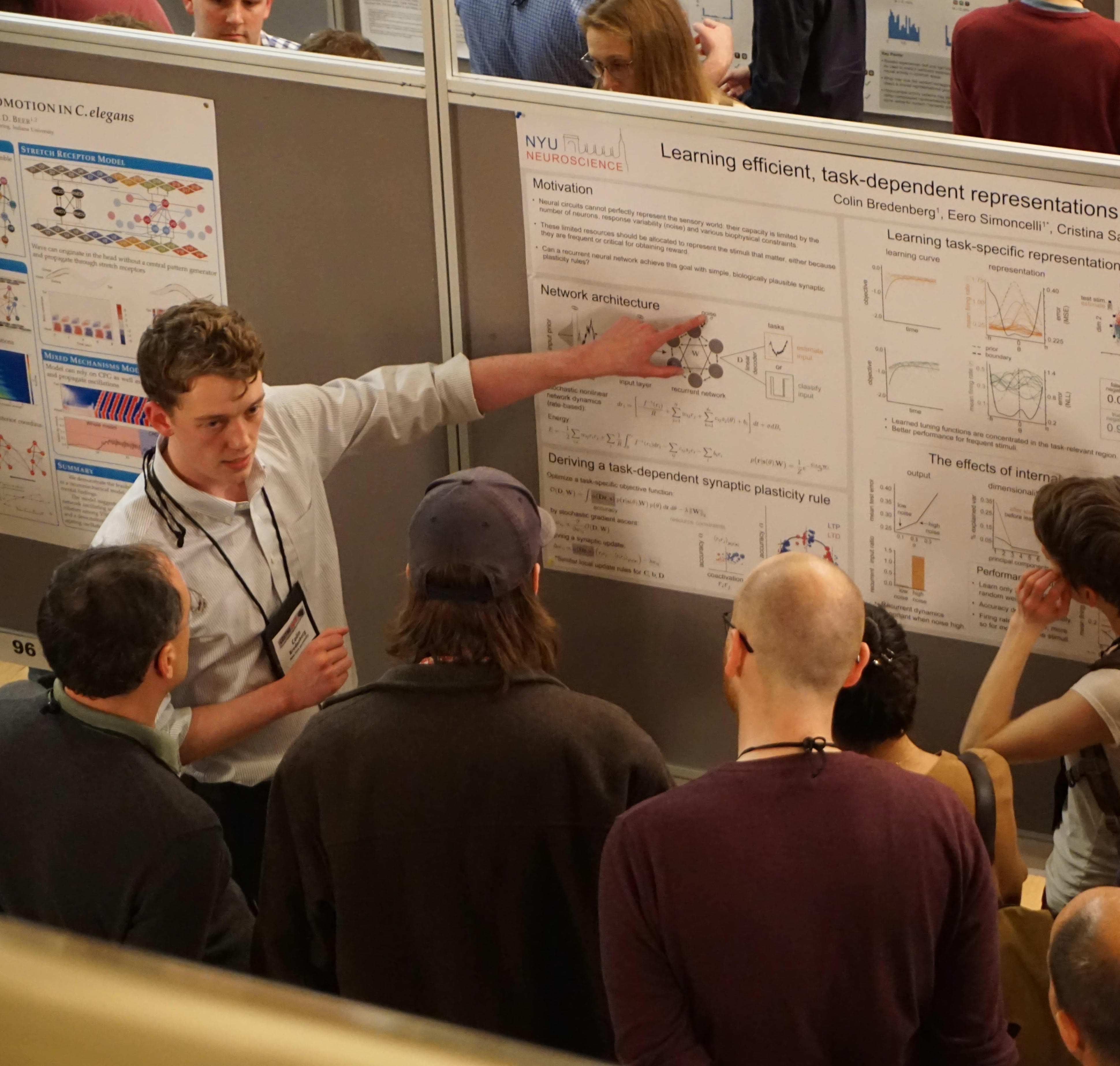

Bredenberg C., Simoncelli E. P., and Savin C. (2019, December). Learning efficient, task-dependent representations with synaptic plasticity. Poster presentation, NeurIPS Workshop on Biological and Artificial Reinforcement Learning. Paper | Poster

Bredenberg C., Simoncelli E. P., and Savin C. (2019, February). Learning efficient, task-dependent representations with synaptic plasticity. Poster presentation, Cosyne 2019. Abstract | Poster

Bredenberg C. and Doiron B. (2017, February). Examining weight perturbations in plastic neural networks. Poster presentation, Cosyne 2017. Abstract

Bredenberg C. and Doiron B. (2016, October). Examining Variably Diffuse Weight Perturbations in Plastic Neural Networks. Poster presentation, University of Pittsburgh’s Science 2016.

Suh E., Bredenberg C., and Van Deerlin V. (2014, October). Screening for Mutations in Frontotemporal Degeneration with a Targeted Next Generation Sequencing Panel. Poster presentation, International Conference on Frontotemporal Dementia.